This case study shows how the conversational redesign of EVO Assistant turned a struggling voice bot into a human, efficient, and on-brand experience, raising NPS from 5.4 to 8.2, reaching 80% annual self-service resolution, and positioning EVO Banco among the most innovative digital banks in Europe.

Company

EVO Bank

Year

2020 - 2025

My role

As a Conversation Designer, I led the definition of EVO Assistant’s voice and content strategy, collaborating with linguists, engineers, marketing, and customer success teams. My work focused on four key goals:

Humanize the experience: craft an empathetic, clear, and brand-aligned tone.

Optimize understanding: improve NLU intents and flows using real data.

Enhance comprehension: implement an LLM-NLU architecture to boost understanding and contextual responses.

Reduce friction and costs: simplify journeys, minimize transfers, and increase self-service resolution.

EVO Assistant

The Challenge


For EVO Bank

EVO Banco was struggling to deliver a consistent and efficient digital experience through its voice assistant. With a Net Promoter Score of 5.4, low intent recognition, and high call transfers to human agents, operational costs were rising while customer trust was declining. The bank needed to turn EVO Assistant into a reliable, on-brand, and cost-efficient self-service channel.


For the clients

Users couldn’t complete their operations through the assistant, often faced long waiting times in the contact center, and experienced frustration and inconsistency in the overall experience. What was meant to be a quick, simple, and human digital interaction had turned into a source of friction.


Both the bank and its customers needed a conversational experience that truly worked, one that felt natural, solved problems effortlessly, and reflected EVO’s promise of a smarter, more intuitive digital bank.

The design process

Before Gen AI

Phase 1

1.1 Understand the user

The first and most important step was to empathize with our users and understand who was actually talking to our assistant.

We analyzed user profiles and real conversation logs to identify expectations and communication patterns. This research helped define how the assistant should sound and behave in each context, setting the foundation for a voice and tone that truly resonated with EVO’s users.

Findings

Our main audience was 30 to 45 years old, followed by younger users aged 18 to 29 and a smaller group over 50. All were digitally savvy and expected quick, friendly interactions.

Pains

EVO Assistant’s tone and persona were too formal and technical, using language that didn’t match users’ everyday vocabulary.

Responses often ended abruptly without guiding users toward completing their task.

EVO Assistant failed to acknowledge users’ emotions: it didn’t recognize frustration when an error happened or celebrate when an operation succeeded.

Solution

We created tone and voice guidelines that defined how EVO Assistant should communicate:

Use simpler, conversational language instead of jargon.

Acknowledge emotions and provide clear next steps.

Adapt tone based on the channel, the user’s level of knowledge, and the context of the interaction.

These guidelines became the foundation for a more natural, consistent, and user-centred conversational experience.

1.2 Understand the team

Just as important as understanding our users was understanding how the team behind the assistant worked.

Early in the research phase, I identified a key operational gap: there was no centralized documentation for conversational designs.

Findings

Pains:

Lack of a single source of truth: decisions, iterations, and validations were scattered across chat threads and personal folders.

Inconsistent updates: without documentation, past decisions were lost or repeated, making it difficult to track progress.

Slow onboarding: new team members had no visibility into previous work or next steps.

Solution

We established a shared documentation system where the team could store, review, and update:

Conversation flows, intents and entities.

Tone and voice guidelines.

Design decisions and the reasons behind them.

This centralized workspace improved collaboration across linguists, software engineers, customer success, and marketing teams, and created a scalable foundation for the assistant’s continuous evolution.

Phase 2

2.1 Review EVO Assistant’s conversational flows

After identifying who was using the assistant, the next step was to understand how they were interacting with it.

I analyzed thousands of real conversations and key performance metrics to identify the most common and high-impact use cases. Applying an 80/20 approach allowed us to focus on the flows that concentrated the majority of user needs and had the biggest potential to improve satisfaction and reduce operational costs.
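As a rough illustration of the 80/20 selection described above, the sketch below ranks intents by frequency and keeps the smallest set that covers 80% of logged conversations. It is not the analysis tooling used on the project: the function name, the sample intent labels, and the counts are all hypothetical.

```python
from collections import Counter


def top_intents_by_coverage(intent_log, coverage=0.8):
    """Return the most frequent intents that together cover `coverage` of the log."""
    counts = Counter(intent_log)
    total = sum(counts.values())
    selected, covered = [], 0
    for intent, count in counts.most_common():
        # Stop as soon as the intents already selected reach the target share.
        if covered / total >= coverage:
            break
        selected.append(intent)
        covered += count
    return selected


# Hypothetical sample; a real log would hold thousands of labelled conversations.
sample_log = (
    ["balance"] * 50 + ["block_card"] * 30 + ["transfers"] * 15 + ["pin_reset"] * 5
)
priority_flows = top_intents_by_coverage(sample_log)
```

In this made-up sample, two of the four intents already account for 80% of the traffic, which is the kind of concentration that justifies redesigning a few flows first.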

Findings

The analysis revealed several issues across the existing flows:

Generic questions led to confusion: the assistant lacked proper disambiguation prompts, so broad or unclear requests often produced irrelevant answers.

No error handling strategy: conversations frequently reached dead ends with no way to guide users back into the happy path.

Missing handovers: the assistant didn’t reliably detect moments when human assistance was required, prolonging failures and frustration.

Underused services and data: although the bank had transactional APIs and contextual data available, the assistant wasn’t leveraging them.


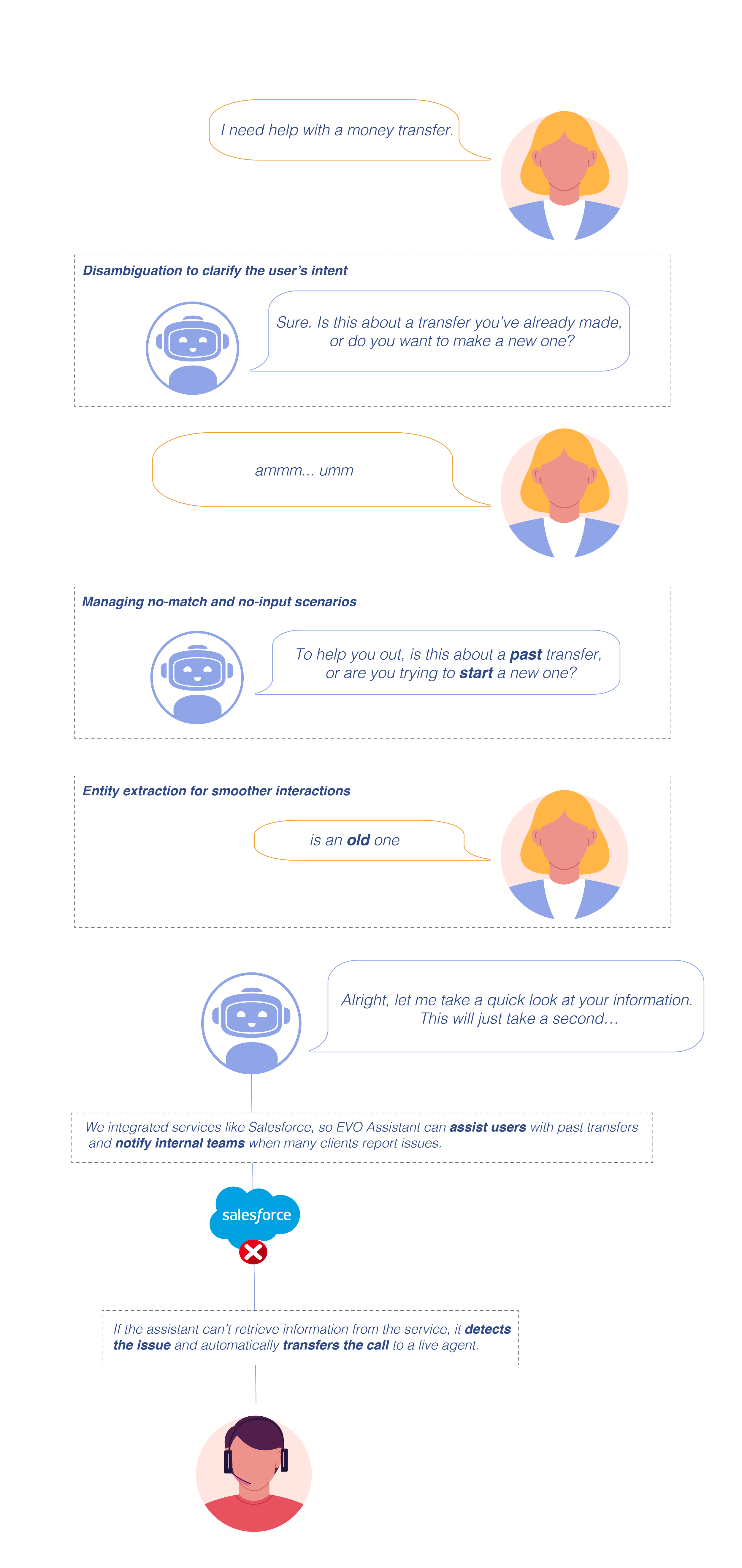

User intent often appears in ambiguous forms, and when the assistant proceeds without confirming it, friction and loss of trust follow. Ensuring that users feel understood is a fundamental part of any conversational experience.

With that in mind, we implemented disambiguation strategies to clarify intent upfront and avoid wrong turns in the conversation.
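One common way to express a disambiguation rule like this is with confidence thresholds over the NLU's intent scores. The sketch below is a minimal example under that assumption; the threshold values, intent names, and the `next_step` function are hypothetical and do not describe EVO Assistant's actual configuration.

```python
def next_step(scores, route_at=0.75, candidate_at=0.30, max_options=3):
    """Decide whether to route, ask a clarifying question, or fall back.

    `scores` maps intent names to NLU confidence values between 0 and 1.
    """
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    candidates = [name for name, score in ranked if score >= candidate_at]
    if not candidates:
        # Nothing plausible: let the error-recovery flow take over.
        return ("fallback", [])
    if ranked[0][1] >= route_at and len(candidates) == 1:
        # One clear winner: go straight to its flow.
        return ("route", candidates)
    # Several plausible intents (or one weak one): confirm with the user first.
    return ("disambiguate", candidates[:max_options])


# A broad request such as "my card" might score like this (hypothetical values).
decision = next_step({"block_card": 0.45, "card_limit": 0.40, "balance": 0.05})
```

With a single weak candidate, the same branch works as a confirmation prompt ("Did you mean...?") rather than a choice between options.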


Conversations can get messy; they don’t always follow a straight line. So we added recovery points at key moments: when a no-match or no-input happened, the assistant could avoid a drop-off and guide the user back on track.
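A recovery strategy of this kind is often built as escalating reprompts: each failed attempt gives the user more guidance, and the conversation is handed over once the reprompts run out. The sketch below assumes that pattern; the prompt wording, the number of attempts, and the `recover` function are illustrative only.

```python
# Hypothetical reprompts, ordered from lightest to most guided.
REPROMPTS = {
    "no_match": [
        "Sorry, I didn't quite get that. Could you say it another way?",
        "I can help with your balance, cards, or transfers. Which one do you need?",
    ],
    "no_input": [
        "Are you still there? Tell me what you need help with.",
        "You can say things like 'check my balance' or 'block my card'.",
    ],
}


def recover(event, attempt):
    """Return (action, message) for a failure event and a 0-based attempt count."""
    prompts = REPROMPTS[event]
    if attempt < len(prompts):
        return ("reprompt", prompts[attempt])
    # Reprompts exhausted: stop looping and offer a person instead.
    return ("handover", "Let me connect you with someone who can help.")


first_try = recover("no_match", 0)
third_try = recover("no_match", 2)
```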


What happens when the assistant can’t access a service?

What should we do when a user has a complex issue?

How should we respond when someone explicitly asks to speak to a person?

We asked these questions and worked hand in hand with the Customer Support team to define clear handover rules that ensure a smooth escalation to human agents whenever needed.
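The three questions above map naturally onto explicit rules. The following sketch shows one way such rules could be encoded; the trigger phrases, the list of complex intents, and the failure limit are assumptions made for illustration, not the rules agreed with Customer Support.

```python
from dataclasses import dataclass


@dataclass
class TurnContext:
    user_text: str
    intent: str = ""
    service_available: bool = True
    failed_attempts: int = 0


# Hypothetical triggers; a production list would come from real transcripts.
HUMAN_REQUEST_PHRASES = ("speak to a person", "talk to an agent", "human")
COMPLEX_INTENTS = {"fraud_claim", "account_closure"}


def handover_reason(ctx, max_failed_attempts=2):
    """Return why the turn should be escalated, or None to stay with the assistant."""
    text = ctx.user_text.lower()
    if any(phrase in text for phrase in HUMAN_REQUEST_PHRASES):
        return "explicit_request"
    if not ctx.service_available:
        return "service_unavailable"
    if ctx.intent in COMPLEX_INTENTS or ctx.failed_attempts >= max_failed_attempts:
        return "complex_issue"
    return None


reason = handover_reason(TurnContext("I want to speak to a person"))
```

Returning a reason rather than a plain yes/no lets the escalation carry context to the agent, so the user does not have to repeat themselves.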


Users don’t want generic answers; they want the assistant to do things for them.

By integrating available transactional services and contextual data, we turned static responses into real actions the assistant could perform (like retrieving balances, blocking cards, or checking past transfers).


To handle the complexity of real conversations, we strengthened the assistant’s logic with better prompts, sharper intent definitions, and more reliable entity extraction, ensuring each interaction matched both user behavior and system capabilities.

Solution

To address these issues, we redesigned the core flows with a focus on clarity, usability, and meeting our clients’ needs.

We worked closely with data, software engineering, and business teams to understand the problems behind each interaction and rebuild the experience with a user-centered approach.

Before the redesign, the assistant wasn’t guiding users effectively, couldn’t recover when conversations went off track, and wasn’t using the bank’s capabilities to provide smarter, more useful support.

Together, these improvements made conversations more predictable, helpful, and far better aligned with what users expected, while supporting the bank’s operational and business goals.

2.2 Review the team’s workflow

Once we understood how users interacted with the assistant, we also needed to understand how the team worked behind it. Reviewing the internal workflow was essential to improving consistency, quality, and the ability to scale new conversational designs.

I analyzed how the engineering, QA, data, and business teams collaborated during each release cycle to identify gaps, blockers, and missed opportunities that were affecting the assistant’s overall performance.

Findings

The analysis revealed several issues across the existing workflow:

Reactive testing: automated tests were used mainly to fix bugs after going live, instead of preventing them before deployment.

No documented test cases: there was no shared list of scenarios or flows to validate before each release.

Missing quality checkpoints: without a clear review process, critical errors reached production and affected the customer experience.

Limited communication with metrics and business: analysts only flagged urgent issues, and there was no open channel to review new flows or report findings after each release.

Delayed business updates: new app features were sometimes communicated days after launch, leaving the assistant unable to answer users’ questions about them.

Solutions

We moved from reactive fixes to a preventative workflow.

Making testing smarter, not harder

We created a clear testing baseline, run before and after each release, to monitor flows and protect critical paths.

Catching issues before users do

We introduced a clear review workflow with mandatory approvals to prevent errors from reaching production.

Turning silos into shared insight

We established regular cross-team reviews with shared dashboards to track releases, evaluate new flows, and surface insights.

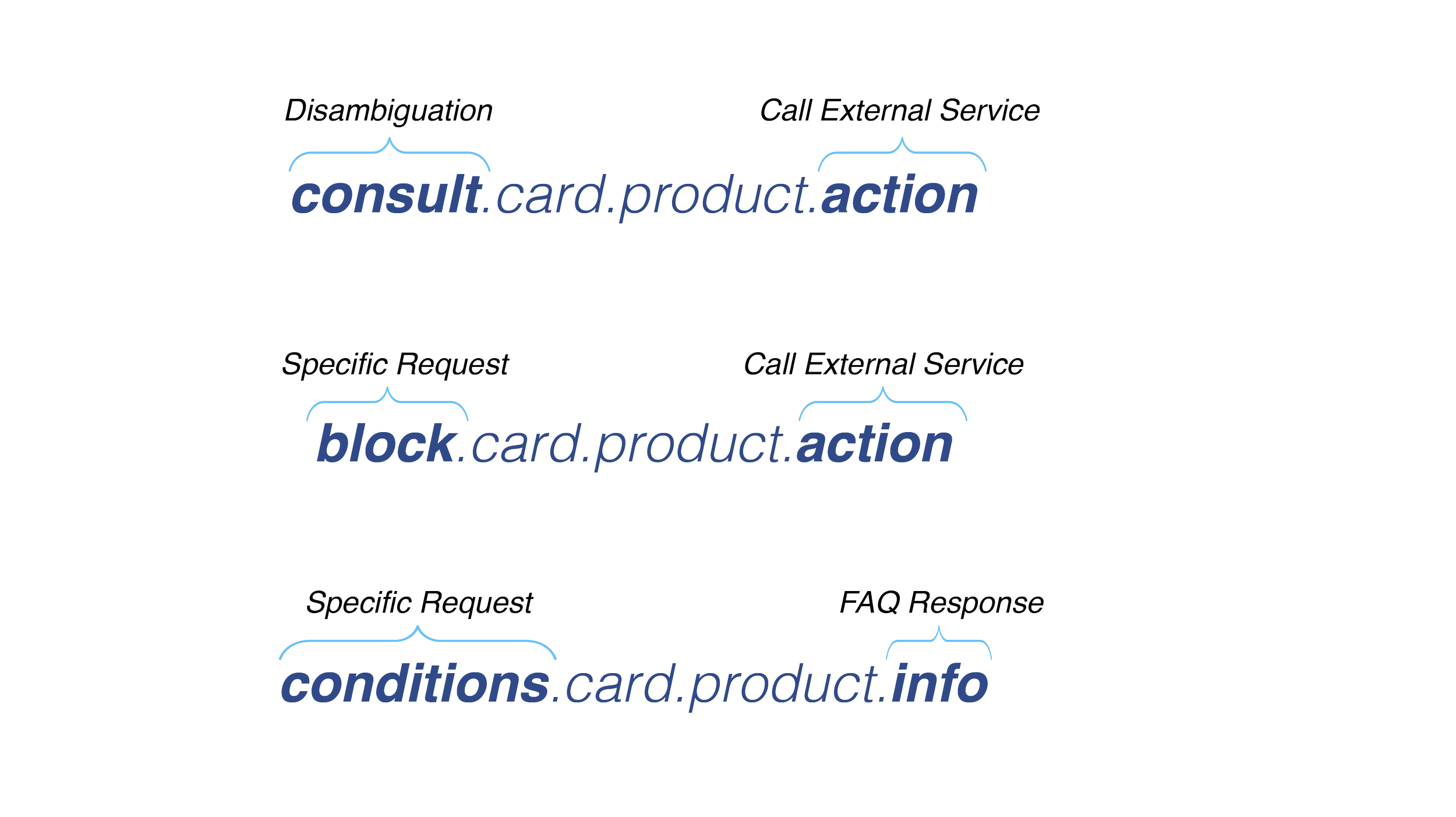

Taxonomy that simplifies testing

We built a clear intent taxonomy so the team could quickly identify whether a request needed disambiguation, an action, or a simple FAQ. This improved flow consistency, NLU accuracy, and overall user experience.
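A taxonomy like this can be kept as a simple lookup from intent name to interaction type, which also makes gaps in test coverage easy to spot. The sketch below is a hypothetical example: the intent names and the `untested_types` helper are invented for illustration and are not the project's real taxonomy.

```python
from enum import Enum


class IntentType(Enum):
    DISAMBIGUATION = "disambiguation"
    ACTION = "action"
    FAQ = "faq"


# Hypothetical entries; a real taxonomy would list every intent in the NLU model.
TAXONOMY = {
    "cards.generic": IntentType.DISAMBIGUATION,
    "cards.block": IntentType.ACTION,
    "accounts.balance": IntentType.ACTION,
    "cards.fees": IntentType.FAQ,
}


def classify(intent_name):
    """Return the interaction type for an intent, or None if it is not catalogued."""
    return TAXONOMY.get(intent_name)


def untested_types(tested_intents):
    """Return the interaction types that no listed test case exercises."""
    covered = {classify(name) for name in tested_intents}
    missing = [t for t in IntentType if t not in covered]
    return sorted(missing, key=lambda t: t.value)


gaps = untested_types(["cards.block", "accounts.balance"])
```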

How EVO Assistant handled real conversations

Clear intent creates a clear path

The assistant doesn’t just guess what the user means. Through disambiguation, it understands the real need behind each request.

From intent to action

The assistant not only understands the user’s intent, it can also navigate directly to the right screen in the app, allowing clients to resolve their needs quickly and without friction.

Escalate when it matters

Some cases need a human. We identified those scenarios and designed a smooth escalation path. When the assistant detects one of them, it simply schedules a call with a real agent.

Just when we had clarity, alignment, and solid designs, Gen AI changed everything.

The design process

From NLU to LLMs: A New Era

Phase 3

Adding an LLM Layer

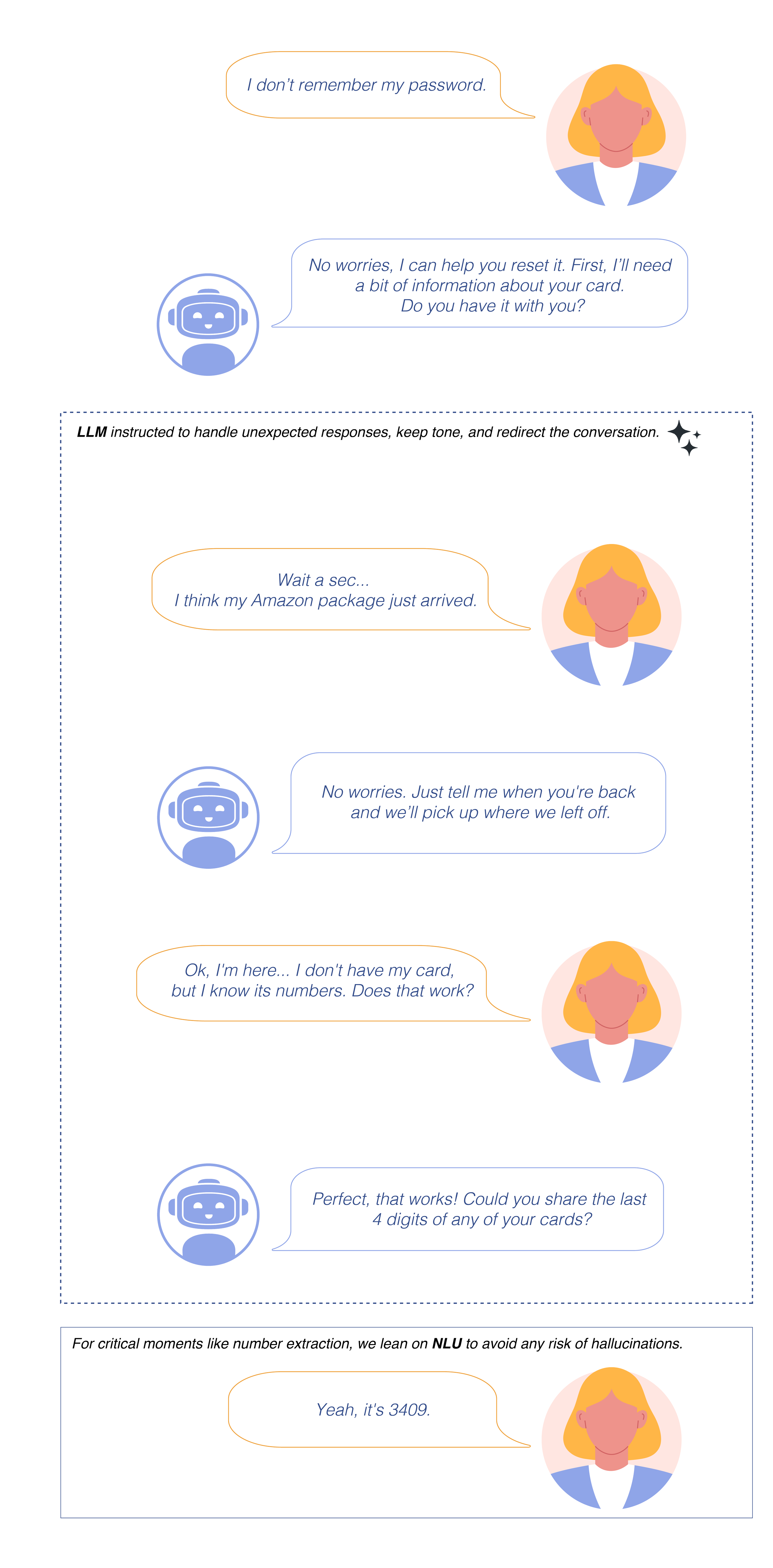

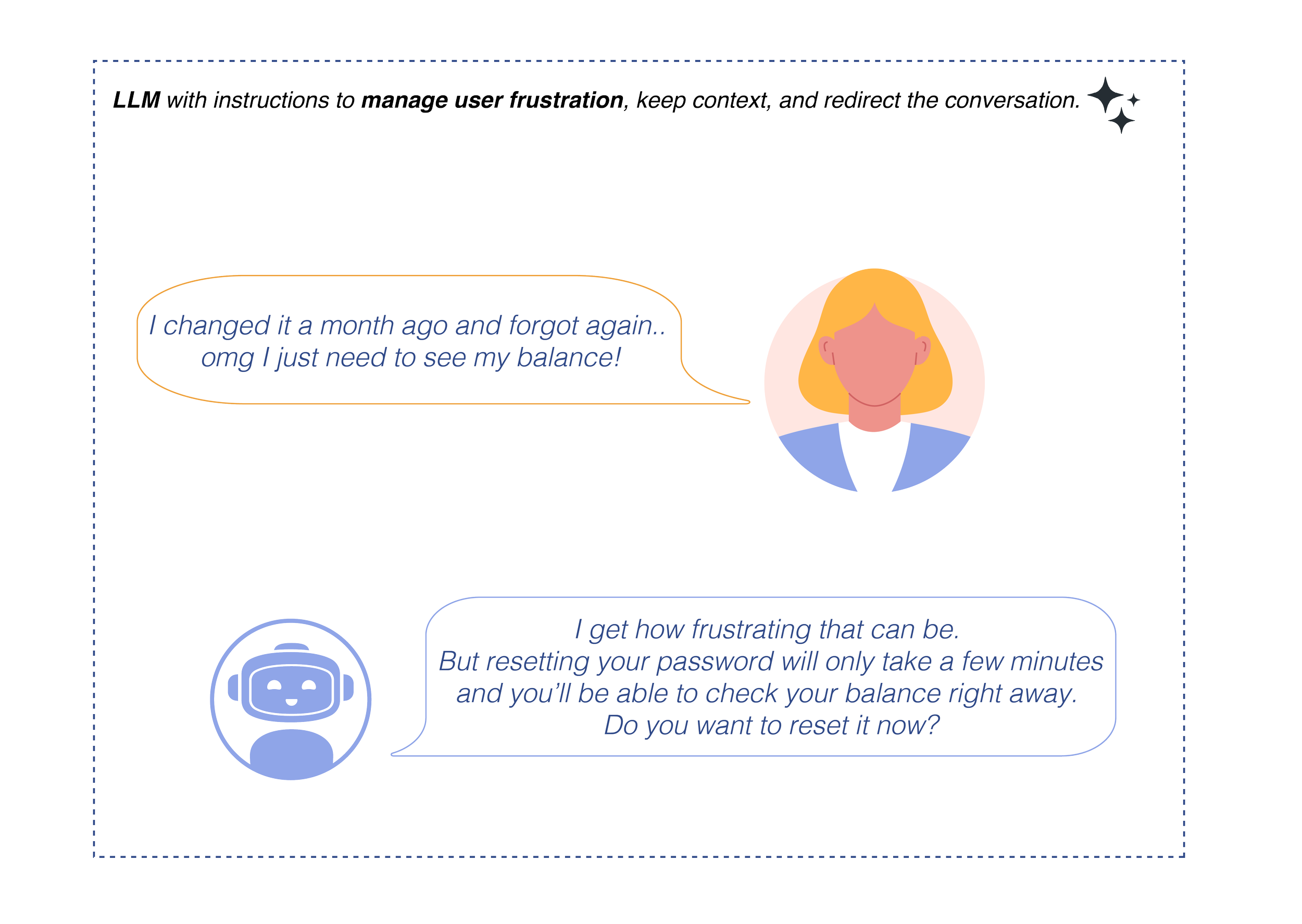

Before integrating GenAI into EVO Assistant, we explored how LLMs could meaningfully improve conversation management. Because the technology was so new, we focused on real, measurable benefits (not hype) to enhance the experience without breaking it.

Findings

User Experience Issues

Generic and emotionally rigid responses: Static prompts failed to adapt to context or emotion, increasing friction and breaking conversational flow.

Disambiguation gaps: Complex and multi-intent requests were difficult for the NLU to interpret accurately.

High rate of false negatives: Many valid requests were classified as “no match,” triggering unnecessary handovers and breaking the flow.

Business Impact Issues

Loss of insights: NLU missed emotional signals and product context, limiting visibility into user needs and opportunities.

Unnecessary transfers: false negatives and poor interpretation increased call-center load and costs by sending calls to agents that the assistant could have handled.

Solution

To address these challenges, we designed and implemented an LLM-powered layer that complemented (not replaced) the existing NLU and flow logic.

We focused on:

Using the LLM for comprehension only (not free-form decisions), ensuring safety and predictability.

Achieving more accurate intent routing through assisted interpretation and entity extraction.

Improving disambiguation by better understanding vague or multi-intent messages.

Enhancing fallback behavior by reinterpreting unclear inputs instead of triggering generic error messages immediately.

Keeping control through guardrails, ensuring every output followed structure, boundaries, and brand tone.

This hybrid architecture allowed EVO Assistant to stay consistent, safe, and predictable while becoming significantly more intelligent.
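To make the hybrid idea concrete, here is a minimal sketch of an NLU-first pipeline in which an LLM is consulted only when the NLU is unsure, and its output is accepted only if it passes a guardrail check. This is an assumption-laden illustration, not EVO Assistant's implementation: the intent names, the JSON output format, the threshold, and the stub `nlu` and `llm` functions are all hypothetical, and a real system would call actual NLU and LLM services in their place.

```python
import json

# Hypothetical whitelist: the LLM may only name intents the flows already support.
ALLOWED_INTENTS = {"accounts.balance", "cards.block", "transfers.history"}


def validate_llm_output(raw):
    """Guardrail: accept only well-formed JSON that names a known intent."""
    try:
        data = json.loads(raw)
    except (json.JSONDecodeError, TypeError):
        return None
    if not isinstance(data, dict):
        return None
    intent = data.get("intent")
    if not isinstance(intent, str) or intent not in ALLOWED_INTENTS:
        return None
    entities = data.get("entities", {})
    return {"intent": intent, "entities": entities if isinstance(entities, dict) else {}}


def interpret(text, nlu, llm, nlu_threshold=0.7):
    """Try the NLU first; ask the LLM to reinterpret only when the NLU is unsure."""
    intent, confidence = nlu(text)
    if intent in ALLOWED_INTENTS and confidence >= nlu_threshold:
        return {"intent": intent, "entities": {}, "source": "nlu"}
    parsed = validate_llm_output(llm(text))
    if parsed is not None:
        return {**parsed, "source": "llm"}
    # Neither layer produced a trusted reading: use the normal fallback flow.
    return {"intent": "fallback", "entities": {}, "source": "none"}


# Stubs standing in for real services (assumptions for this example only).
def stub_nlu(text):
    return ("no_match", 0.2)


def stub_llm(text):
    return '{"intent": "cards.block", "entities": {"card": "debit"}}'


result = interpret("someone nicked my card", stub_nlu, stub_llm)
```

Because the LLM only proposes an intent and entities, the existing flows still decide what the assistant says and does, which is what keeps the behavior predictable.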

Conversations stopped being purely transactional. The assistant could now acknowledge emotions, adapt its tone, and respond in a way that felt more human.

From Conversations to Business Strategy

We integrated an LLM to process cross-flow feedback, transforming raw user comments into actionable insights that uncovered blind spots and helped prioritize experience improvements.

Here are the key reasons the LLM identified when clients closed their accounts:

One insight stood out clearly: most clients were leaving because they switched to another bank.

We worked closely with Customer Success to uncover why, refocus on retention, and design targeted strategies to close experience gaps.

Designing for measurable impact

The assistant evolved into a strategic brand asset, reinforcing EVO’s position as one of the most innovative digital banks in Spain.

Awards & Recognitions

World Finance Banking awards

Most Innovative Bank in Europe

Global Finance Banking awards

Best Consumer Digital Bank in Spain for 2022

2022

World Finance Banking awards

Most Innovative Bank in Europe

2020